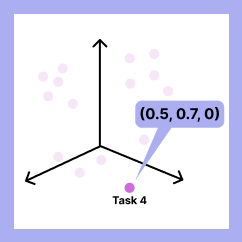

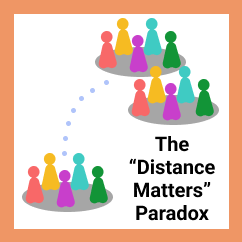

Research on teams spans many diverse contexts, but integrating knowledge from heterogeneous sources is challenging because studies typically examine different tasks that cannot be directly compared. Most investigations involve teams working on just one or a handful of tasks, and researchers lack principled ways to quantify how similar or different these tasks are from one another. We address this challenge by introducing the “Task Space,” a multidimensional framework that represents tasks along 24 theoretically motivated dimensions. To demonstrate its utility, we apply the Task Space to a fundamental question in teams research: when do interacting groups outperform individuals? Using the Task Space to systematically sample 20 diverse tasks, we conducted an integrative experiment in which 1,231 participants completed tasks at three complexity levels, either individually or in groups of three or six (180 experimental conditions). We find striking heterogeneity in group advantage, with groups performing anywhere from three times worse to 60% better than the best individual working alone, depending on the task context. Task Space dimensions significantly outperform traditional typologies in predicting group advantage on unseen tasks. Additionally, our models reveal theoretically meaningful interactions between task features; for example, group advantage on creative tasks depends on whether the answers are objectively verifiable. The Task Space ultimately enables researchers to move beyond isolated findings to identify boundary conditions and build cumulative knowledge about team performance.